There are a lot of numbers up there, so let’s see if we can draw some conclusions from them.

Looking at the entire pipeline as one overall energy measurement, we can see that the variability (standard deviation as a % of energy consumed) is large and spans a wide range: anywhere from 4% to 16%. However, when we break it down into installation and running steps, we notice a drastic split: the installation step consistently has a much wider variability (6-105% (!!)), while the run sysbench step has a much narrower variability (0-9%).
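To make the "standard deviation %" metric concrete, here is a minimal sketch of how it could be computed per step. The readings below are made-up illustrative values, not our measurements, and the helper name `std_dev_percent` is hypothetical. The sketch also assumes the sample standard deviation; a population standard deviation would give slightly smaller numbers.

```python
from statistics import mean, stdev


def std_dev_percent(samples):
    """Return the sample standard deviation as a percentage of the mean."""
    return 100.0 * stdev(samples) / mean(samples)


# Hypothetical per-run energy readings in joules (illustrative only).
install_energy = [40.0, 95.0, 60.0, 150.0]
run_energy = [500.0, 505.0, 495.0, 500.0]

install_var = std_dev_percent(install_energy)
run_var = std_dev_percent(run_energy)

print(f"install step: {install_var:.1f}% variability")
print(f"run step:     {run_var:.1f}% variability")
```

Computing the metric per step rather than once for the whole pipeline is what exposes the split described above.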

Looking through the job logs for the installation step, it becomes apparent that network speed accounts for quite a bit of this variability. Jobs whose package downloads were slower (even for the same packages) took longer, as you would expect. This explains the time variability and the corresponding energy variability we see.

This highlights the importance of breaking down your pipeline when you estimate energy with the goal of optimizing it. You generally do not have much control over network speeds, though you can try to minimize network traffic. Fortunately, if we look at the energy breakdown, we can see that both the energy consumed and the CPU utilization were lower across the board for the install steps. So while these sections have a large variability, they also account for a minority of the energy cost.

Looking at just the running steps, which account for the majority of the energy cost, we notice two things. First, the energy standard deviation % and the time standard deviation % are almost identical in most cases (GitLab’s EPYC_7B12 being the odd one out, though the two numbers are still comparable). This means that the longer a job takes, the proportionally larger its energy cost, which is what we would expect.

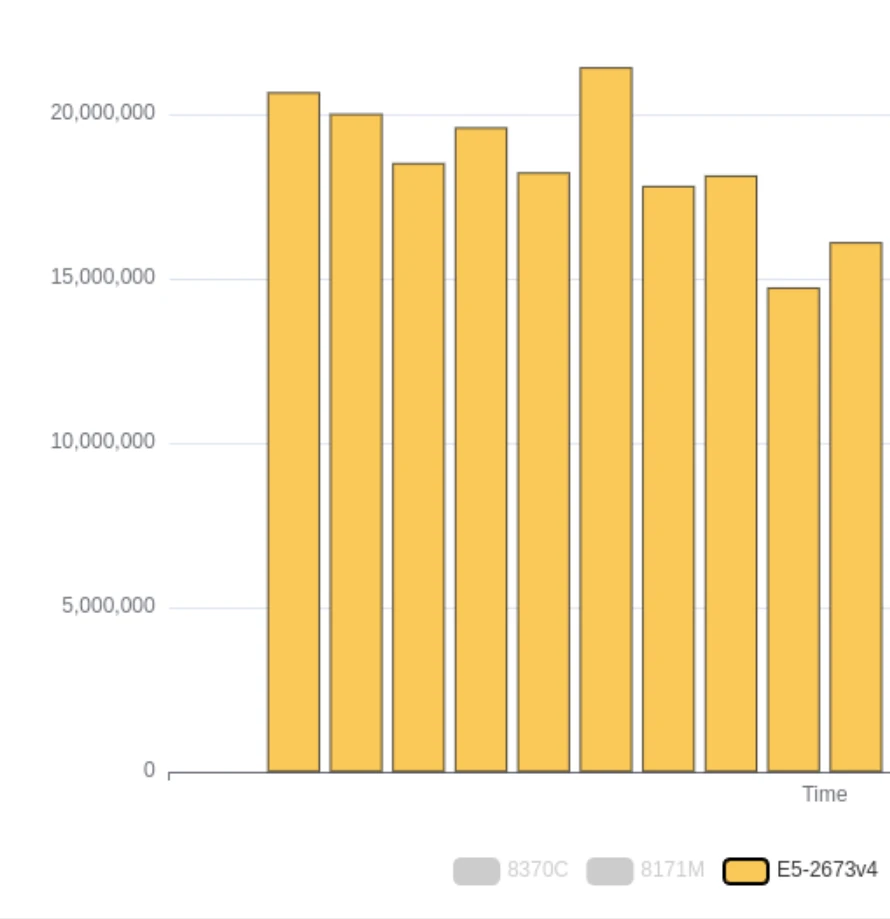
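The link between time and energy variability in the run step can be illustrated with a small sketch. It assumes the average power draw is constant across runs, so energy is simply power multiplied by duration. That is an idealization, and the durations and power value are hypothetical rather than taken from our data. Under that assumption the two standard deviation percentages come out identical, because scaling every sample by a constant scales the mean and the standard deviation by the same factor.

```python
from statistics import mean, stdev


def std_dev_percent(samples):
    """Return the sample standard deviation as a percentage of the mean."""
    return 100.0 * stdev(samples) / mean(samples)


# Hypothetical values (illustrative only, not measured).
AVG_POWER_WATTS = 12.0
durations_s = [100.0, 104.0, 98.0, 110.0]

# Idealized model: energy (J) = constant average power (W) * duration (s).
energies_j = [AVG_POWER_WATTS * t for t in durations_s]

time_var = std_dev_percent(durations_s)
energy_var = std_dev_percent(energies_j)

print(f"time std dev %:   {time_var:.2f}")
print(f"energy std dev %: {energy_var:.2f}")
```

When the two percentages diverge in real measurements, as they do slightly for the EPYC_7B12, one reading is that the average power draw itself varied between runs.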

Second, the baseline standard deviation we are calculating here seems to be very CPU dependent. Certain CPUs, such as the 8370C and 8272CL, seem to perform more consistently than others. Their standard deviation is very low: 0-1%.

When we reran these tests a few times over a few weeks, these CPU-specific patterns still held.